Verbal communication is a powerful staple of the human experience. Without it, lamenting to a friend over lunch about a bad day or asking your professor for that ever-so-crucial deadline extension would be arduous, if not impossible. But let’s face it: speaking is one of the many things we take for granted. When we want to say something, we just say it. Easy, right? Not exactly.

Because it seems so simple, speaking is seldom understood for what it truly is: the remarkable product of a precise and collaborative biological process. After all, there’s a reason that initially intimidating, thorny words such as *otorhinolaryngologist* and *supercalifragilisticexpialidocious* roll off the tongue after just a few tries. Still, to fully grasp the complexity and utility of speech, we must first consider what our lives would look like without it.

Paralyzed in 2003 after a brain stem stroke, Pancho, whose real name remains private, found himself stripped of the ability to speak at only twenty years old. This devastating event carried grim implications for Pancho’s future, which, at the time, could only be seen through the hopeless lens of his forced silence. Remarkably, that future turned out differently. If you were to bump into Pancho today, you could hold a conversation with him, albeit a slower one than usual. Maybe you would discuss the weather. Or politics. Or maybe you would delve into the incredible science and technology at the very heart of your conversation.

So, what exactly is this science and technology?

To help answer this, let’s imagine that while enjoying a relaxing fall picnic, Pancho suddenly encounters a jumpy, energetic golden retriever puppy, who, bored of simply running around, begins to clumsily roll over Pancho’s blanket. Overcome with delight, Pancho tries to exclaim, “You’re so adorable!” What would happen at this point? First, the electrodes implanted over the speech-motor regions of Pancho’s brain would get busy, meticulously recording the signals that would normally orchestrate the muscle movements required to articulate “You’re so adorable!” The recorded brain signals would then leave Pancho through a long cable attached to the top of his head. The cable would swiftly carry the signals to a computer, which, after translating Pancho’s neural activity into English, would display his intended compliment: “You’re so adorable!”

David Moses, an engineer who worked closely with Pancho and his medical team while developing this technology, aptly summarized this new method of communication to The New York Times: “Our system translates the brain activity that would have normally controlled his vocal tract directly into words and sentences.”

Among its many other implications, Pancho’s newfound ability to communicate underscores the brain’s foundational role in speech. After all, if there were no input from his brain, Pancho’s computer would have nothing to translate, no matter how sophisticated its AI framework. Pancho’s story therefore awakens the scientist in us, stirring a restless curiosity about the ever-elusive *how* of it all. How, for example, do our brains help us converse with a friend? What regions of the brain are activated? Which neurons fire?

Questions like these run head-first into the complex frameworks and processes that ultimately release our internal thoughts and feelings into the outside world. But before wading directly into all of the intricate mechanisms at play during speech, it’s important to take a step back and examine the separate components of the speaking process.

Consider a recent conversation you’ve had with a friend. Though you likely did a lot of talking, you probably did a lot of listening as well, perceiving a variety of sounds throughout the conversation. You recognized these sounds as words and interpreted what they meant before forming a response. Then, when your friend’s thought came to an end, you began sculpting your own verbal contribution, piecing together a string of words that collectively formed a single, cogent response. Finally, after all that, you voiced what you had to say.

What does all this mean?

It means that speech isn’t one function controlled by one brain structure. Instead, speech represents the last piece of a larger puzzle formed by both the individual and combined efforts of multiple brain regions. Therefore, to fully understand the science of speech, we must delve into the functions of many brain areas that initially seem irrelevant to such a simple, everyday action.

For most people, speech and language functions are carried out primarily by the brain’s left hemisphere. This is true for about 95% of right-handed individuals and about 70% of left-handed individuals. Within the left hemisphere, four regions serve crucial functions throughout the speaking process: the auditory cortex, Wernicke’s Area, Broca’s Area, and the sensorimotor cortex. If any one of these structures fails to contribute, the entire process breaks down, and our ability to speak clearly and fluidly sharply diminishes.

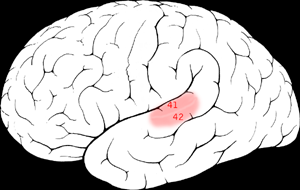

The cerebral cortex of each hemisphere is divided into four regions called lobes: the frontal, parietal, temporal, and occipital lobes. Each lobe carries out different functions and houses unique structures. One such structure is the auditory cortex, which allows us to perceive sound. The auditory cortex sits in the temporal lobe, on a structure called the superior temporal gyrus (STG). The STG is one of the brain’s many gyri, or raised folds, on its surface.

The auditory cortex comprises two regions: the primary auditory cortex and the secondary auditory cortex, also referred to as the belt areas. The primary auditory cortex processes different frequencies of sound. However, researchers believe that the primary auditory cortex isn’t responsible for detecting some of the more complex sounds we normally encounter, such as those that arise during speech. Instead, speech-related sounds are thought to be processed by areas of the secondary auditory cortex, though more research is needed to understand exactly how this is accomplished.

Still, researchers have found that specific areas along the STG process sounds of varying complexity.

Results from one 2012 study revealed that the left middle segment of the STG activates when we process phonemes, the basic units of sound that make up the words we say. When we process entire words, however, the left anterior (front) section of the STG becomes active. And when we process whole phrases, activation occurs at the most anterior region of the STG.

Yet perceiving the complex sounds of complete words and sentences is only part of the task; it’s equally crucial that we understand what they mean. This raises the question: how do we interpret speech?

Unfortunately, this area of research is mired in controversy. While many in the medical community believe Wernicke’s Area, named after German physician Carl Wernicke, to be responsible for language comprehension, recent research challenges this view. Neurologist Dr. Jeffrey R. Binder argues, “the region currently labeled the Wernicke Area plays little or no role in language comprehension.” Reviewing the findings of recent neuroimaging studies, Binder concludes that Wernicke’s Area, located at the posterior (back) section of the STG, performs a function known as phonologic retrieval, a discrete process that isolates and organizes the sounds that make up the words we plan to say. In doing so, Wernicke’s Area helps form a sound-based “mental image” of the thoughts we want to articulate. Rather than the region in which language is understood, then, Wernicke’s Area is best thought of as a vehicle for forming the words we eventually voice.

Though Wernicke’s Area might not help us comprehend language, other brain regions do. These structures are spread out around Wernicke’s Area rather than concentrated in one place. Still, because language comprehension has long been so closely tied to Wernicke’s Area, the precise neural basis for this complex function remains elusive.

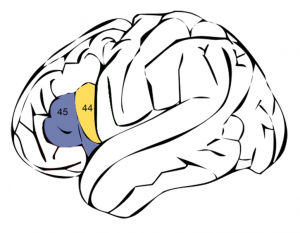

Once auditory processing and language comprehension have taken place, the task of verbal articulation fast approaches, activating an important region at the front of the brain called Broca’s Area.

Located on the inferior frontal gyrus of the frontal lobe, Broca’s Area operates as a planning and correction system. Research has shown that Broca’s Area is most active just before we speak. Nathan Crone, a physician and researcher at Johns Hopkins, notes that “rather than carrying out the articulation of speech, Broca’s area is developing a plan for articulation, and then monitoring what is said to correct errors and make adjustments in the flow of speech.” In other words, Broca’s Area creates a speech blueprint that outlines both what we intend to say and how we will say it. This blueprint is sent from Broca’s Area to the brain’s motor cortex, which initiates a set of muscle movements that carry out the blueprint’s instructions.

But if speech ultimately reflects a series of muscle movements, where in the brain are these movements controlled?

The muscle movements of speech are governed by the ventral sensorimotor cortex (vSMC), which controls movements of the tongue, lips, jaw, and larynx. These structures are called articulators, and their combined movements produce the sounds of the words we speak.

In one analysis of electrical activity across the vSMC, researchers identified specific locations along the cortex responsible for articulating both consonant and vowel sounds. As participants spoke a broad range of consonant-vowel syllables, defined in the study as “19 consonants followed by /a/, /u/ or /i/,” the researchers used electrodes to record the corresponding activity on the vSMC. For the syllables /ba/, /da/, and /ga/, approximately thirty “electrode sites” on the vSMC became active in each participant. When subjects produced the consonant /b/, electrodes toward the dorsal (upper) end of the vSMC activated. Electrodes toward the middle of the vSMC activated as participants voiced /d/, and one electrode toward the ventral (lower) end became active during /g/. Three electrodes also picked up activity during articulation of the vowel /a/, though their locations were not made clear.

In addition to mapping syllable production onto different regions of the vSMC, the study examined the areas of the cortex that control each articulator. Researchers found that movements of the lips were controlled by the dorsal (upper) portion of the cortex, while movements of the tongue were governed by many areas along the cortex. Motor control of the jaw and larynx was similarly spread out across the vSMC. In fact, many small pockets along the cortex contribute to the movements of more than one articulator.

Intricate biological systems tend to produce deceptively simple results, so it’s no surprise that we often overlook the complexity of the many functions we perform every day. However, given how fundamental many of these functions are to our lives, we must discover what lies behind them. Speech, in particular, demands our collective attention, for those who lose their ability to speak also surrender a fundamental form of human expression and connection. The biology of speech, then, need not be reserved for the medical and science communities. We all must embrace the biological significance of the words and ideas we convey.

References:

- https://www.nytimes.com/2021/07/14/health/speech-brain-implant-computer.html

- https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4874870/

- https://www.ncbi.nlm.nih.gov/books/NBK10900/

- https://www.khanacademy.org/test-prep/mcat/processing-the-environment/sound-audition/v/auditory-processing-video

- https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3286918/

- https://www.ncbi.nlm.nih.gov/books/NBK533001/

- https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4691684/

- https://www.sciencedirect.com/topics/neuroscience/brocas-area

- https://www.hopkinsmedicine.org/news/media/releases/brocas_area_is_the_brains_scriptwriter_shaping_speech_study_finds

- https://www.nature.com/articles/nature1191